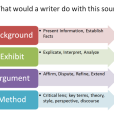

I’m working with a colleague (Amy Hofer, of Threshold Concepts fame) to create a suite of tutorials that will be integrated into online University Studies (think General Education) classes. One of the learning objects we plan to create is tentatively called “good for what?” Students tend to see sources in black and white: a source is either good or bad. And they usually want a librarian or their instructor to tell them definitively which it is. But we all know it’s not so simple. Throughout our suite of tutorials, we plan to focus more on determining what kinds of evidence you need to answer your question than on what types of sources are good. (If you want to question your own teaching of “sources,” this article on the BEAM model, as well as Barbara Fister’s and Kate Ganski’s excellent posts on the topic, are great places to start.) This tutorial will use concrete examples to get students thinking about whether something is good or bad in the context of their own research question and the sorts of evidence they need to make a case. It’s all about context, right?

Ironically, I think librarians sometimes don’t take context enough into account when creating information literacy learning objects. As I wrote in my post about Library DIY, I think many libraries first developed tutorials based on the way we teach in the classroom, when most tutorials are actually meant to be used at a student’s point of need. A tutorial designed to help a student at their point of need should look a lot more like a reference desk interaction than like classroom instruction. However, that doesn’t mean that learning objects based on the way we teach in the classroom are bad. They’re absolutely fine when assigned to students as part of their coursework. In online classes without synchronous components, this may be the only way for a librarian to provide course-integrated instruction. And when such a tutorial is well integrated, with discussion, activities, or assessment attached to viewing it, it can be a very effective learning tool.

Just as students usually think about sources in black-and-white terms, I think we often view methods of tutorial creation as simply good or bad. Really, we should also be evaluating technologies and methods for tutorial creation based on the individual context. I remember when screencasting software, like Adobe Captivate and TechSmith’s Camtasia, first started being used in libraries. Between 2004 and 2006, there was extreme enthusiasm for screencasting, and I think people saw it as the magic bullet for creating more engaging tutorials and more effective learning. Why create a boring tutorial with text and screenshots when you can concretely show someone every step of how it’s done in a video? Many librarians abandoned their dull HTML tutorials and created screencasts for using databases, downloading eBooks, and more.

Over time, we learned that screencasting maybe wasn’t actually good for everything. The first time a database interface changed shortly after you created a screencast of it was pretty painful, right? In many cases, you have to start nearly from scratch when an interface changes, whereas with an HTML tutorial, you just change a few screenshots and some text and you’re good to go. Some also began questioning whether screencasts were effective for teaching students how to use tools like our databases. Lori Mestre’s work has been very influential for me (see “Student preference for tutorial design: a usability study” and “Matching up learning styles with learning objects: what’s effective?”), and I’m surprised that I haven’t seen more studies comparing the efficacy of (or even student preferences for) different types of tutorials. Mestre found that most students preferred HTML tutorials with screenshots that they could easily refer back to, and that they were better able to complete tasks when using HTML tutorials than when using screencasts.

For my part, I do not use screencasting to teach people how to use tools. Based on Mestre’s research and my own experience, I’m fairly convinced that students trying to learn how to use a database need something they can easily refer back to, and video isn’t necessarily great for that. Also, the long-term maintenance burden of a database screencast is extreme. I do, however, use screencasting to teach ideas and concepts, like brainstorming keywords, developing a search strategy, or determining what evidence you need to answer your research question. In those cases, I think screencasts are far more engaging than anything I could create using text and screenshots, and since they’re about learning ideas rather than tools, students only need to get the gist of what I’m saying. Information literacy concepts also don’t change as rapidly as interfaces do. Still, I like to provide a text transcript (as well as captioning within the video itself; I hope you’re all doing that!) for those who would prefer to just read the words.

While Guide on the Side has been getting a lot of well-deserved positive buzz (much of it from me!), I also don’t see it as a magic bullet. If you don’t already know about this open source tutorial creation software, take a look at my column on it. After getting my hands dirty with it last summer, I can definitely say that it’s exceedingly difficult to create a good Guide on the Side, because it’s meant to provide guidance while the student is using the tool or database. You therefore need to anticipate issues students might have and provide appropriate guidance rather than being directive. The Guides on the Side that I first created were more directive (telling students to click here, do this, and then answer a question), and I really didn’t feel like this was a great use of the software.

I recently read an interesting critique of Guide on the Side for creating a split-attention effect. While the post very accurately describes how to fix split-attention issues in tutorials, I don’t think her critique of Guide on the Side is accurate. If Guide on the Side were designed to be a tutorial with text on the side, it would definitely be a good example of the problems with the split-attention effect. But the tutorial is the stuff on the side. The student is actually using the database in the main part of the page, with guidance in the left-hand pane. It’s like a better version of having an HTML tutorial to refer back to: instead of moving between browser windows, the instructional content is in the same window you’re using to search the database. However, I do agree that it would be really cool to have something like Guide on the Side, but with pop-up bubble tips right beside the parts of the database you’re actually using (anyone else remember Pop-Up Video?). That is actually mostly possible with Adobe Captivate, but I rarely see anyone creating tutorials that use Captivate’s interactive components, where people can actually click on things and get feedback. There are also accessibility issues with those features, which is why I don’t use them.

For our University Studies tutorials, Amy and I are creating five modules that faculty can choose to incorporate into their courses: using the library, developing a strategy/getting started, finding evidence, evaluating evidence, and using evidence. They’re going to contain a mix of videos, text and screenshots, and interactive components where students answer questions related to their own research. We’d hoped to develop each module so that students would receive a badge for completing it; if the same module were assigned in another class, they could just indicate they’d done it and skip the assignment. Given that we have such a huge population of transfer students (two-thirds of our students transfer in), we thought this would help put everyone on a more level playing field with research without forcing faculty to always teach to the lowest common denominator. In my conversations with faculty teaching in the University Studies sophomore inquiry program, this was mentioned as a frequent frustration. We even wanted to have a way for students to test out of each module if they already came in with strong research skills. However, it’s looking like our technology support for this initiative will not be what we’d hoped, so for now, we’re thinking of using Qualtrics as the backbone of our tutorials. It’s a pretty novel use of the survey software, but it seems a far better medium than LibGuides for sequential content. You can embed videos and screenshots in a Qualtrics survey, you can have multiple pages, you can have students answer questions and even see different content based on their answers, and you can have quiz questions that give students feedback if they get a question wrong. I’ve seen people try to make sequential tutorials work in LibGuides, and it just seems like a mess.
I understand why people use it (it’s probably the only CMS they have access to), but it’s a terrible medium for most instructional content, especially a sequential tutorial.

When I create tutorials, I think about the following questions and the answers guide my design and technology decisions:

1. What are your learning outcomes? What should students be able to do once they’ve gone through this tutorial?

2. Who is your population? Is the tutorial for everyone at your institution, or for a specific class or population? Think about what you know about that population. Is your tutorial accessible (an inaccessible tutorial is really not even an option anymore)? Culturally sensitive? Appropriate for international students and others for whom English is not their first language?

3. How will students use this? Will they be using it in a class, or is it meant for them to find at their point of need? If it’s for a class, will there be activities, discussions, or assessments tied to the tutorial in class so students can practice what they’ve learned, or should you build that into the tutorial itself? You can’t really have a tutorial that meets both needs (classroom and point of need) well, and your decision on this will affect the length and overall design of the learning object. While people often say a point-of-need learning object should be no longer than three minutes (and likely shorter), that rule does not always apply to a course-integrated tutorial.

4. What is the universe of technologies available to you to create this? And which will work best for the population, learning outcomes, and use cases above? Be willing to think outside the box with this (Qualtrics is definitely an example of out-of-the-box thinking). What will the long-term maintenance burden look like with each technology available to you?

I’ve been creating online tutorials since 2004, and I still find that my thinking about pedagogy, technologies, and instructional design best practices changes at least once a year. What I know for certain is that considering context is key to designing effective online tutorials.

What advice would you offer to someone creating online tutorials for the first time? What do you wish you’d known when you first got started? What questions do you ask yourself before creating an online learning object? What online tutorial or learning object have you created that you’re most proud of? We’re all learning, experimenting, and trying to do what’s best for our students. And the more we share our own experiences, observations, and research, the better equipped we’ll be to positively impact student learning.

Image: ADDIE Model, by CSU Chico IDTS

Qualtrics does all that, huh? I have been looking for an alternative to the sorry survey and quiz tools in our course-management system. Campus has a Qualtrics license, so I may just have found it! Thank you, Meredith; you just solved a vexing problem in one of my continuing-education courses.

Woo hoo! Glad to hear it, Dorothea. It is very sophisticated software, and I’ve been using it for all sorts of crazy purposes since I learned about its existence. I believe you can embed surveys into web pages, so you could even embed the quizzes or surveys in the LMS.

BTW, here’s a link to a page on using Qualtrics for quizzes http://www.uwec.edu/help/Qualtrics/quiz.htm

We are designing something similar here, but are using Blackboard to house the content. Once we get the new service pack, students will be able to earn badges when they complete modules (we are still testing the assessments to make sure students must actually achieve something, rather than just complete the module, to earn a badge). Did you consider using your CMS, and what made you shy away from it?

Hi Trisha! We wanted very much to use our LMS, but the people in charge of it decided to stop paying for the Learning Objects Repository (LOR) around a year ago. So there’s no easy way to integrate the content into multiple classes without building it into each one or having a separate library course (the latter of which, IMHO, is the kiss of death). With Qualtrics, we can provide embed code so faculty can easily embed a module directly in their online classroom, so for students, at least, it’ll be seamless. If we had an LOR, using D2L would have been a no-brainer.

I’m hoping that we will get the LOR back in the future and can pursue that (especially since D2L is starting to integrate badge support). It would be very easy to get the content out of Qualtrics and into the LMS if that ever happens.
